Update log 2025-04-08 08:00:00

GPT-4o | Multimodal AI Powerhouse

GPT-4o, from OpenAI, is a cutting-edge multimodal AI excelling in text, images, and more. With top-tier reasoning and a 128K token context, it outshines GPT-4 for diverse applications.

OpenAI o3-mini | Advanced Reasoning AI Model

OpenAI o3-mini is a fast, cost-efficient reasoning AI model excelling in STEM tasks. At roughly 90% lower cost than o1, it offers strong coding and problem-solving capabilities.

Grok 3 | Insightful Reasoning AI Model

Grok 3, from xAI, is a powerful AI excelling in reasoning, truth-seeking, and real-world problem-solving. With a 128K token context, it rivals top models like GPT-4o and Claude 3.5.

Phi-4 | Efficient Reasoning AI Model

Phi-4, a 14B-parameter open-source AI from Microsoft, excels in reasoning, math, and coding. With a 16K token context, it rivals larger models like GPT-4o, optimized for low-resource environments.

Command A | Precision-Driven AI Model

Command A, from Cohere, is a compact, high-precision AI model excelling in reasoning, task execution, and efficiency. With a 64K token context, it rivals models like Grok 2 and Phi-4 for targeted applications.

QwQ-32B | Compact Reasoning AI Model

QwQ-32B, from Alibaba’s Qwen Team, is a 32B-parameter reasoning AI excelling in math, coding, and logic. It rivals larger models like DeepSeek-R1 with a 131K token context window.

Gemini 2.5 Pro Experimental | Cutting-Edge Reasoning AI Model

Gemini 2.5 Pro Experimental, launched by Google, is a top-tier reasoning AI with a 1M token context window. It excels in coding, math, and multimodal tasks, outperforming rivals.

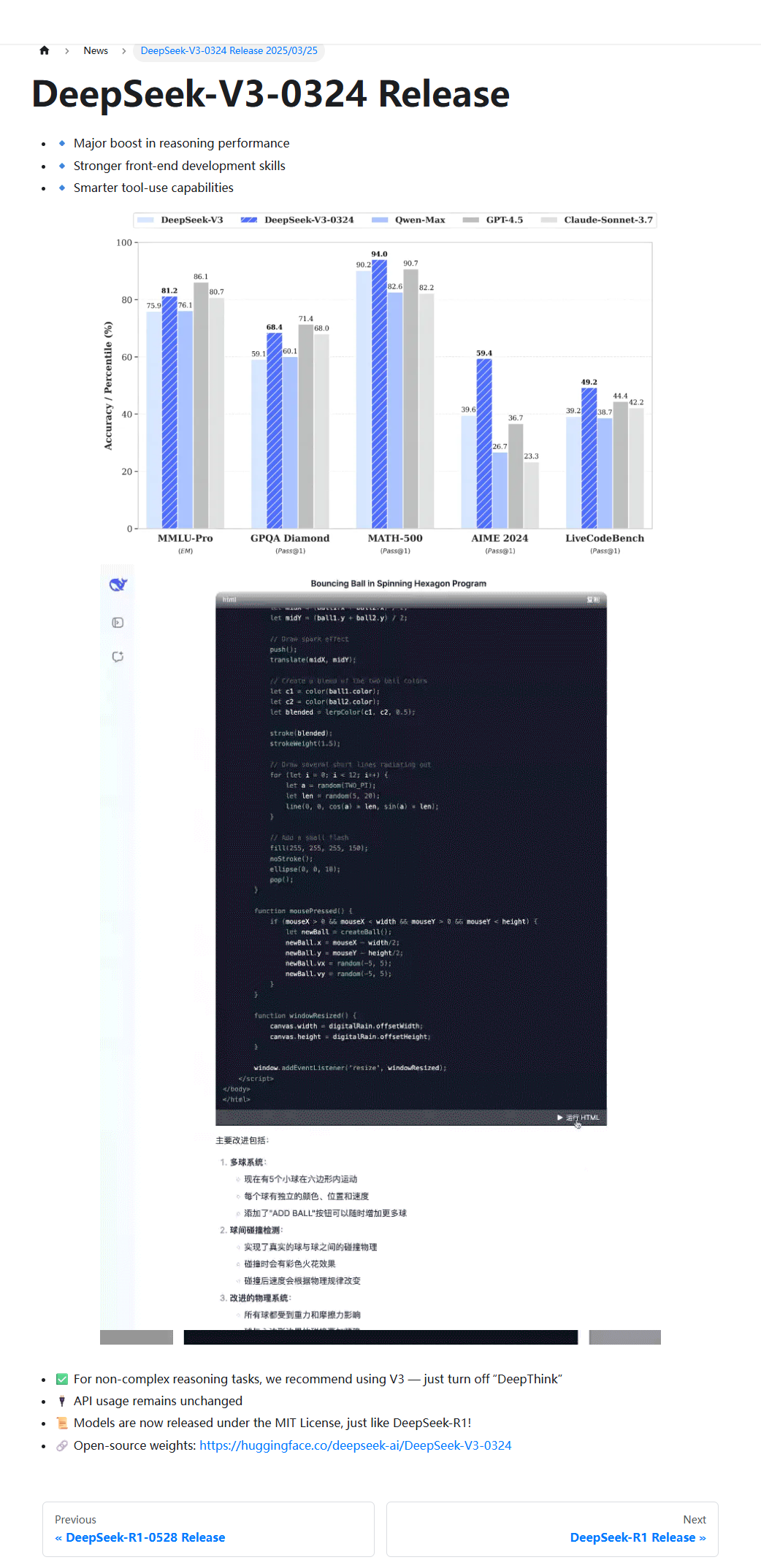

DeepSeek-V3 | Advanced AI Language Model

DeepSeek-V3 is a breakthrough Mixture-of-Experts (MoE) language model with 671 billion total parameters, of which only 37 billion are activated per token, making it highly efficient. Trained on 14.8 trillion tokens, it outperforms many open-source models and is comparable to leading closed-source models.
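
To put the efficiency claim in numbers, here is a minimal sketch that computes the share of parameters active per token in an MoE model. The helper name `active_fraction` is hypothetical, and the inputs are the publicly reported DeepSeek-V3 figures (671B total, 37B activated); the ratio ignores routing overhead and is only a rough indicator of per-token compute.

```python
def active_fraction(total_params_b: float, active_params_b: float) -> float:
    """Return the share of parameters used per token in an MoE model.

    Both arguments are parameter counts in billions.
    """
    if total_params_b <= 0 or not 0 <= active_params_b <= total_params_b:
        raise ValueError("expected total > 0 and 0 <= active <= total")
    return active_params_b / total_params_b


# Publicly reported DeepSeek-V3 figures: 671B total, 37B activated per token.
share = active_fraction(671, 37)
print(f"{share:.1%} of parameters active per token")  # prints "5.5% ..."
```

In other words, each token passes through only about one-eighteenth of the full model.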

Sonar | High-Speed Reasoning AI Model

Sonar, from Perplexity, is a blazing-fast AI model optimized for search and reasoning. Built on Llama 3.3 70B, it delivers factual, readable answers with a 32K token context, outpacing rivals like GPT-4o mini.

© Copyright 2025 All Rights Reserved By Neurokit AI.